Academic Careers vs. Industry Careers

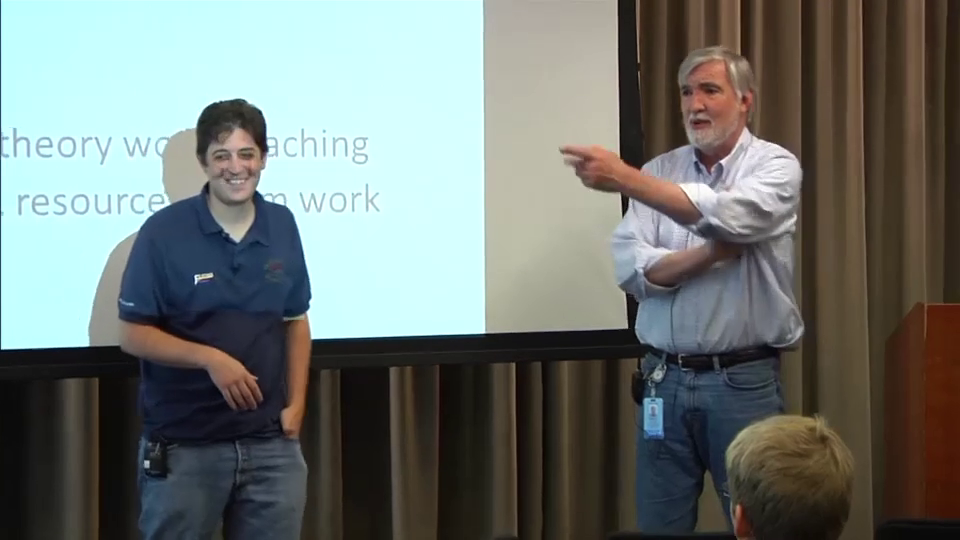

The following is an edited transcription of a Q&A session titled "Academic Careers vs. Industry Careers" given by Greg Duncan and Guy Lebanon to summer interns at Amazon in the summer of 2014. Some of the content is specifically aimed at the fields of machine learning, statistics, and economics. The content does not reflect the official perspective of Amazon in any way.

Greg: I am the Chief Economist and Statistician in GIP. I do a variety of things, and depending on what day you are talking to me I'm an economist, a statistician, a machine learner, or a data scientist. I have a PhD in economics, an MS in statistics, and a BA in economics and English. I work on a variety of statistical and forecasting issues in our Global Inventory Planning process, and I do data science consulting generally within Amazon. I am responsible for the algorithm that powers the buy box on Amazon's web site.

I have 40 years of experience in data science, economics, and statistics. I was a full professor in both economics and statistics and had faculty appointments at Northwestern (6 years), UC Berkeley (12 years), WSU (9 years), Cal Tech (2 years), and USC (3 years). Currently, I have a part time appointment at UW's economics department in addition to my full time position at Amazon. I've had joint positions between industry and academia for most of my career. I've supervised more than 20 PhD dissertations and 18 of them are full professors, some of them in good places: CMU, Maryland, UCSD, and the University of Wisconsin. I have an early 1980 paper that lays out map reduce and mini-batch SGD (though using other names).

I quit publishing in 1989 since I was at Verizon and GTE Labs and they didn't allow publishing. I was the chief scientist at GTE Labs and I led the team that designed the spectrum auction algorithm for the Verizon wireless network. I also designed and fielded hundreds of consumer preference surveys. I then transitioned to consulting and worked at National Economic Research Associates (NERA; a Marsh McLennan firm) as senior VP and management committee member. Later I was Managing Director at the consulting firms Huron and Deloitte, and a Principal at the Brattle consulting group. I then retired, but about two years ago I came out of retirement to join Amazon.

Guy: I grew up and went to college in Israel. I came to the US in 2000 for a PhD at Carnegie Mellon University. I graduated in 2005 with a thesis in the area of machine learning. It was clear to me since my sophomore year in college (1997) that I wanted to be a professor. I worked towards that goal in a very focused way from 1997 to my PhD graduation in 2005. I was lucky to get a faculty job right after graduation and I joined Purdue as an assistant professor in 2005. Due to a two-body situation and geographical constraints I decided to move in 2008 and I ended up at Georgia Tech. I got tenure and promotion to associate professor in 2012 and then went on a one-year sabbatical at Google. At the end of my sabbatical my wife and I decided to stay on the west coast and I decided to stay in industry, but move to Amazon rather than stay at Google.

Q: What are your main messages about careers in industry vs. careers in academia?

Greg: Here are my main points:

(a) There is a lot more freedom in academia than in industry, but the problems are not as interesting.

(b) The stuff you do in industry ends up actually being used.

(c) Industry jobs pay well, while academic jobs do not.

Guy: First, I would like to point out that there is no objective right choice here. There are pros and cons and the right answer depends on the person, and possibly even the specific time in a person's life. For example, for me the right choice in 2005 was to join academia, but in 2013 it was to move to industry.

The main pros of academic jobs are:

(a) Freedom. Despite what some recent blogs say about professors not being completely free due to their need to publish and get grants, there is much more freedom in academia than in industry. This is true for young faculty members, but it is especially true for senior faculty members. They do not report to a manager in the same sense that they would in industry. As long as they remain somewhat active in publishing and grants, they can work on whatever they want.

(b) Theory. Academic jobs are much more suited than industry jobs for people who want to work on theory. This is true for pure mathematicians and for CS theorists, but it is also true for people who mix theory and algorithms.

(c) Teaching. Not everyone likes to teach, but if you do then this is a huge pro. I like teaching and I felt a lot of satisfaction from teaching students of all levels.

The main pros of industry jobs are:

(a) Big Impact. A few people can accomplish something truly big and important on their own. Albert Einstein comes to mind. The other 99% need a lot of help, especially in computer science. The help includes a team, proprietary data, and massive computing resources that are all generally unavailable in academia. I want to clarify that I'm talking about really big accomplishments that revolutionize our world, for example, the Internet, cloud computing, smart-phones, and e-commerce. These revolutions all required a lot of people working together and a lot of financial support. In academia you can develop some theory that will influence these revolutions to some degree but it is very rare to actually be the main driver of these revolutions.

(b) Teamwork. Academic work is pretty solitary. Professors meet periodically with their students and colleagues, but it doesn't come close to the teamwork that you find in industry. Some people prefer to work alone, and that is fine (notice my point above though that real impact requires teamwork). But for people that enjoy being a part of a team or leading a team this aspect of industry can be a big pro.

(c) Two Body Careers and Geography. In the high-tech industry it is fairly easy to find a good job in many regions. For example, you can find many good opportunities in the US in San Francisco, the bay area, Seattle, New York City, Los Angeles, Boston, and Chicago. Some of these locations have more opportunities than others, but a good candidate can find good options in any of the above locations, and many other locations as well. In academia, a typical applicant sends 10–50 applications all over the country, and if they are lucky they get one or two offers, likely in places that are not their top choices. This is a problem for the professor, but it is an even bigger problem for the professor's spouse, who is likely to have separate geographic preferences.

(d) Compensation. Compensation is much better in industry than academia. The compensation gap is minimal right after PhD graduation, but it widens significantly with time. It depends on many factors, but it is quite possible for the pay gap to grow to more than 100% after 10 years (possibly significantly more than 100%). Many young PhD graduates don't consider this a priority right away, but this becomes a bigger priority later on when the graduates plan on having kids, buying a house, etc.

(e) Opportunities (see next paragraph).

Workplace and role stability is much higher in academia and it can be both a pro and a con. It is a pro since it is nice to not worry about what happens next and to be able to make long-term plans. It is a con since stability is negatively correlated with new opportunities. In industry people frequently leave and roles change and this can often lead to exciting new opportunities. For example, my immediate manager at Amazon left a few months after I started. This caused some unexpected issues for me, but things turned out very well in the end: I got an opportunity to interact directly with Amazon's leadership team. Industry is full of opportunities, but one has to be ready for some amount of instability.

There are two stories that I want to mention next that are relevant for this discussion.

Story 1: As a professor and graduate student, I noticed that professors often "brainwash" graduate students that academic jobs are the best path forward for the best students, and that industry jobs should only be entertained if a faculty search is unsuccessful (or is unlikely to be successful). Professors convey this message to their students since they believe it to be true (after all they ended up being professors because that is what they believe). But there is an added incentive for professors to do that: placing your graduate students as faculty in top departments increases your reputation and your department's reputation and ranking. Indeed, the placement of graduate students as professors in good schools is a key component in how professors and departments are evaluated (both formally and informally). I personally witnessed department chairs and deans urging professors to push their top students to faculty jobs rather than industry.

While at school, graduate students receive this message from their thesis advisors and other professors they look up to. I want to offer here a different perspective and hopefully help people understand the relative pros and cons so they can make the best decision for themselves.

Story 2: A couple of years ago after getting tenure at Georgia Tech, I had a minor midlife crisis. I could choose to proceed in many different paths as a tenured professor, but I didn't know what to choose. I could focus on teaching or writing a book; I could keep writing research papers in my current area, or switch to a different research area. I started writing a book. I studied for some time new research fields (computational finance and renewable energy) — thinking that I may want to change my research direction. I eventually understood that the main question I need to answer is: "what do I want to do for the rest of my life?" This question is highly related to the question "looking back from retirement, what accomplishments will I consider to have been successful?"

The key insight for me was: publishing papers, having others cite my work, and getting awards is simply not good enough when the alternative is improving the world. In the future, when my kids ask me what I did during my long work days I want to point to more than a stack of papers, decent pay, and a lengthy CV. I want to say that I played a big role in helping society and changing the world for the better. The chance of doing that in industry is much bigger than in academia. We are living in a unique age of increasingly fast revolutions: computers, Internet, e-commerce, smartphones, social networks, e-books. These recent revolutions were all driven by companies like Amazon, Apple, Google, Facebook, and Microsoft. We'll see many more revolutions in our lifetime including wearable devices, self-driving cars, online education, revolutions in medicine, and more. I do not mean to say that I can change the world by myself in a couple of years, but with the help of colleagues and over the period of several years it is possible.

Greg: I found most of my research topics in the nexus of computer science, economics, statistics, applied math, and psychology. At Amazon these areas are like tectonic plates hitting one another. Economists can do some things, statisticians can do other things, and data scientists can do other things. If you are in academia, it is not easy to work in that nexus. Many colleagues in the statistics department would say that is nice work but it is not really statistics stuff. The same thing would happen in computer science and economics departments. I find working in that nexus to be very exciting. It isn't clear what to do, but you realize that there is a problem and no one knows how to work on it. Another related issue is that in industry you need to solve a specific business problem, not a related problem as is often done in academia. It's a kind of freedom, but it is also a restriction. It's like writing a sonnet — you can do anything you want as long as you maintain its form.

I really like working at Amazon. Another place I had a lot of fun was Verizon where I had a lot of impact. When I see people buying stuff from Amazon I can point and say I helped make this work well. Similarly, when I see people using Verizon phones I can say I helped develop that technology.

In my area of economics and statistics you probably want to go to academia first, have your midlife crisis, and then move to industry. My midlife crisis, by the way, was having three kids preparing to go to college and realizing that even a senior tenured professor at a good university can't send them to college and can't buy a house. Perhaps an industry postdoc position would work well, in that people don't go straight to a faculty job but would have some industry experience first. Unfortunately, that does not happen frequently in economics or statistics.

Q: What limitations does an industry job place on future jobs? What if you choose industry first and then have a midlife crisis and want to move to academia?

Greg: In economics or statistics that would be a killer. However, I think in a growing field like data science perhaps you can switch to academia after industry. I think it is important to keep a connection with universities even when you are in industry. I have done that for much of my career. In fact, I recently taught a course on data science and I put the syllabus on the web. I got many requests from academics asking me to come and teach for their departments since they are trying to start an ML group.

Guy: This would not be a big deal if you are going to industry for a short amount of time (1–3 years) and then apply for faculty jobs. If you are in industry longer and want to keep the option of moving to academia you need to find a place that allows and even encourages publishing. You don't have to publish like crazy but you need to keep publishing. I also think that sometimes the most interesting papers come from industry since they work at a scale that academics don't have access to. Such papers are worth more than typical academic papers experimenting on standard public datasets and will be very valuable if you later apply for faculty jobs.

In machine learning, cutting-edge work is increasingly done in industry since academics don't have access to the really interesting data, evaluation methods, and computational resources. It is already impossible for academics to reproduce large-scale ML experiments described in industry papers and build upon them, and this will become more common in the future. Once this happens more frequently, algorithmic and applied ML innovation will occur primarily in industry.

Greg: I want to amplify that. I get a lot of requests from former colleagues of mine that ask me to give them interesting Amazon data so they can work on it. The answer is usually no and not just at Amazon; Google and other companies would usually say the same thing. In industry you have access to datasets that academics can only dream of. You will be picking up stuff that is quite relevant and the market values that human capital significantly. Sometimes universities hire industry veterans; for example, USC does that. These veterans may not even have PhD degrees, but they have experience that is extremely valuable and generally unavailable in academia.

Guy: I want to mention a related issue. In academia the mindset is usually you join a department and you stay there until retirement. Perhaps you move from one university to another once in the middle of your career. In high-tech industry the mentality is very different these days. Many people join a company and then move after a few years, and then move again. Transitioning from one company to another is especially easy if you live in a high-tech hub like the bay area, Seattle, or New York City, but it happens in other places as well. As a result, young graduates should not feel that choosing between different industry options is a huge decision that will determine their entire career without a possibility to backtrack from it.

Greg: That is a good point. In academia I can't tell you the number of times someone made me an offer and I said no I'm not interested, and then something changed and I called back after two years expressing interest and they said sorry, we can't reissue that offer at this point. That absolutely doesn't happen in industry. It's almost like fishing: we didn't get you this year but we'll try again next year. Maybe you will be upset for some reason and we'll be able to get you later. The key thing is we are in a growing and expanding industry and as a consequence experienced people are in high demand.

Q: If you knew you were going to industry anyway would you still get a PhD and would you do anything different?

Guy: That is a very good question and if you look at the web there are a lot of blog posts discussing whether a PhD is worth it if you end up in industry. It's a parallel discussion to the discussion of academic vs. industry careers in that there are pros and cons and no right or wrong answer. It depends on the person, and the specific mindset they have at that point in their life.

In my case, I definitely do not regret doing the PhD. Doing a PhD means losing considerable money (often in terms of opportunity cost), but the way I see it one should optimize for their long-term career rather than for a 5–10 year horizon. If you do a PhD and move to industry, in some sense you lose 5 years, but there will be enough time in your future 30–40 years to make up for that financially and otherwise. The PhD experience will likely be the only time in your life where you can look at a problem and study it and become a real expert at it without distractions. In industry you have distractions like needing to launch a product or fixing an urgent problem, and you may not be able to learn fundamental skills that take years to develop. For example, a deep understanding of machine learning requires deep knowledge of statistics, probability, real analysis, linear algebra, etc. If you are working on an ML product in industry you just don't have the time and peace of mind to dive deep and properly learn all these areas. I also think it is an enjoyable experience and it shapes your personality in some way. There is plenty of time afterwards to worry about product launches and changing the world.

Greg: I have a different perspective. In economics you really need a PhD and in statistics it is getting to the point where you really need a PhD to do scientific work. The reason is that we have a mature discipline now. In the 1950s a very smart creative MS or even BS could become a professor. These days I can pretty much tell who has a PhD and who doesn't based on the way they think. In my area, when you get to the MS level you feel like you know everything, and everyone at that level knows everything. If you do a PhD, your thesis advisor says tell me something we have never heard of. You have to demonstrate your creativity and you have to learn how to be creative and solve problems that are ambiguous and aren't well defined. The best theses are in areas where people may not even know that there are problems and as a consequence people are doing things incorrectly. Such significant creativity does not usually come from people that didn't get a PhD since they didn't develop that mindset. And so not having a PhD stands out much more than 60 years ago.

Guy: I agree with Greg, but I don't think it is quite as pronounced in computer science. You do normally need a PhD to be a scientist, but there are a lot of exciting innovations that can be accomplished without a PhD experience. I also want to say that being a scientist is not the only way to do exciting things and make a significant impact. You can be an engineer, a product manager, a people manager, or a UX designer, and you can do amazing things that change the world.

Greg: I agree. I was referring to a research scientist working in areas of statistics or economics. Also, if you want to go the business route a PhD is not very relevant — you should get an MBA instead.

Q: What about publication policy at Amazon and at other companies?

Greg: If you are doing something that is very relevant to Amazon the last thing you want is to publish it and have a competitor get it. At Verizon and GTE Labs we had a no or minimal publication policy. We thought Bell Labs messed up and gave their stuff away. On the other hand, people who are starting their careers do need to publish, and I am sympathetic to balancing the need for having people know about you and your work. Early in your career it is important to publish, which is why I think it makes sense to have an academic job first.

Guy: I think companies should publish to some extent, assuming their business interests are maintained. Many companies including Amazon have a publication policy where people submit a request to publish a paper and someone goes over the request and decides whether to let you publish, ask you to change the paper, or just prohibit it altogether at this point in time.

Q: What about choosing between a researcher career and a software engineer career?

Guy: I want again to clarify a misconception that researchers in industry are better or more senior than engineers. That is simply not the case. For example, my manager is a business person and doesn't have a PhD, but I have a lot of respect for him, his experience, and what he accomplished. I have no problem taking directions from a manager, regardless of whether he is a scientist, an engineer, or a business person. Similarly, I have a lot of respect for my colleagues in different job ladders. Unless you are at a place that focuses on pure research (like MSR, though that may be changing there as well), the goal of both scientists and engineers is the same: develop a great product and help the company and its customers. People in different job ladders would have different strengths but everyone works as a team to get things done. A software engineer would have a really strong skill set in one area and a scientist would have a really strong skill set in another area.

Q: How did you feel your impact change when you moved from academia to Google and when you moved from Google to Amazon?

Guy: When I was in academia I felt that I had an impact — people cited my work and told me that they liked my paper and that it inspired them. I got invited to give talks and received a few awards. I felt good about myself and my impact. But when I moved to industry and saw how industry is rapidly changing the world, it put the academic impact in perspective. What I previously thought of as big impact all of a sudden became minor and elusive. It was hard to point at concrete long-lasting ripple effects that my work had on the real world (except the influence I had on my students).

When I was at Google, I was officially on sabbatical and I kept the option of going back to academia open. This resulted in some lack of focus and so my impact at Google was mostly scientific. At Amazon I officially resigned from Georgia Tech and jumped with both feet into an industry career. That change in mindset resulted in having a more profound product impact.

Greg: I, too, went on sabbatical to GTE Labs and I intended to come back, but afterwards decided to stay in industry. I had a good publication record and citations, but it wasn't the kind of impact that I had at Verizon or that I have here at Amazon. The impact you can have in industry is real and immediate. It's like baking bread in that you work hard and the loaf comes out and you eat it: instant gratification. In academia you submit your article, and then you wait a while and the reviews come back with a revise-and-resubmit decision. Then you wait a bit and you go back and do some work, and then resubmit. Perhaps at the end it gets into the journal but by the time people read it and ask me about it, I'm working on something else and sometimes I hardly even remember that paper. I remember that somebody asked me about a paper recently and I had to look it up; I forgot I even wrote it. The point is that impact in industry is real and palpable and people note it. Also, about politics: many businesses are political, but what I've seen is that they are less political than any economics or statistics department I've been in. Perhaps Guy can comment on politics in CS.

Guy: At Purdue's ECE department there was quite a bit of politics that actually shocked me as a new assistant professor. The other departments I have been at were not very political.

Q: How do you compare work life balance between industry and academia?

Greg: I think work life balance is a lot better in industry. In academia for the first 10 years people are very focused on getting tenure and then getting to full professor. If you are not working on weekends, you are not going to get promoted. It's as simple as that. At Amazon and at GTE Labs and Verizon you can organize your time so that you have more time for your family. On the other hand, we are all professionals and most of us would do the work for free. I'm the luckiest person in the world since they pay me for doing something that I love doing.

Guy: I had a different experience in that I don't think work life balance in academia has to be much worse. There were about 3 years where I was working particularly hard and I've seen my colleagues work particularly hard (years 2–4 of tenure track). In general, I was at work from 8 in the morning until 5 in the afternoon with occasional extra work later at night if needed. I do the same thing right now in industry. I do think it depends on each person and in both industry and academia there are workaholics or people who are influenced by workaholics to work very long hours.

One nice thing about academia is that work is more flexible and you can work from home (or other places) more. Also, it is really nice to have the academic flexibility during the summer semester.

Photo-Illustration: Randi Klett
