Ten Lessons I Wish I Had Been Taught (zz)

From Machine Learning to Machine Reasoning by Léon Bottou

Machine-Learning Maestro Michael Jordan on the Delusions of Big Data and Other Huge Engineering Efforts

Big-data boondoggles and brain-inspired chips are just two of the things we’re really getting wrong

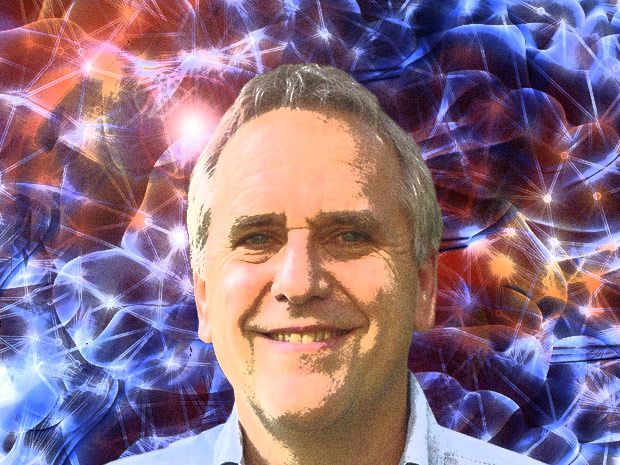

Photo-Illustration: Randi Klett

The overeager adoption of big data is likely to result in catastrophes of analysis comparable to a national epidemic of collapsing bridges. Hardware designers creating chips based on the human brain are engaged in a faith-based undertaking likely to prove a fool’s errand. Despite recent claims to the contrary, we are no further along with computer vision than we were with physics when Isaac Newton sat under his apple tree.

Those may sound like the Luddite ravings of a crackpot who breached security at an IEEE conference. In fact, the opinions belong to IEEE Fellow Michael I. Jordan, Pehong Chen Distinguished Professor at the University of California, Berkeley. Jordan is one of the world’s most respected authorities on machine learning and an astute observer of the field. His CV would require its own massive database, and his standing in the field is such that he was chosen to write the introduction to the 2013 National Research Council report “Frontiers in Massive Data Analysis.” San Francisco writer Lee Gomes interviewed him for IEEE Spectrum on 3 October 2014.

Michael Jordan on…

- Why We Should Stop Using Brain Metaphors When We Talk About Computing

- Our Foggy Vision About Machine Vision

- Why Big Data Could Be a Big Fail

- What He’d Do With US $1 Billion

- How Not to Talk About the Singularity

- What He Cares About More Than Whether P = NP

- What the Turing Test Really Means

-

Why We Should Stop Using Brain Metaphors When We Talk About Computing

IEEE Spectrum: I infer from your writing that you believe there’s a lot of misinformation out there about deep learning, big data, computer vision, and the like.

Michael Jordan: Well, on all academic topics there is a lot of misinformation. The media is trying to do its best to find topics that people are going to read about. Sometimes those go beyond where the achievements actually are. Specifically on the topic of deep learning, it’s largely a rebranding of neural networks, which go back to the 1980s. They actually go back to the 1960s; it seems like every 20 years there is a new wave that involves them. In the current wave, the main success story is the convolutional neural network, but that idea was already present in the previous wave. And one of the problems with the previous wave, which has unfortunately persisted in the current wave, is that people continue to infer that something involving neuroscience is behind it, and that deep learning is taking advantage of an understanding of how the brain processes information, learns, makes decisions, or copes with large amounts of data. And that is just patently false.

Spectrum: As a member of the media, I take exception to what you just said, because it’s very often the case that academics are desperate for people to write stories about them.

Michael Jordan: Yes, it’s a partnership.

Spectrum: It’s always been my impression that when people in computer science describe how the brain works, they are making horribly reductionist statements that you would never hear from neuroscientists. You called these “cartoon models” of the brain.

Michael Jordan: I wouldn’t want to put labels on people and say that all computer scientists work one way, or all neuroscientists work another way. But it’s true that with neuroscience, it’s going to require decades or even hundreds of years to understand the deep principles. There is progress at the very lowest levels of neuroscience. But for issues of higher cognition—how we perceive, how we remember, how we act—we have no idea how neurons are storing information, how they are computing, what the rules are, what the algorithms are, what the representations are, and the like. So we are not yet in an era in which we can be using an understanding of the brain to guide us in the construction of intelligent systems.

Spectrum: In addition to criticizing cartoon models of the brain, you actually go further and criticize the whole idea of “neural realism”—the belief that just because a particular hardware or software system shares some putative characteristic of the brain, it’s going to be more intelligent. What do you think of computer scientists who say, for example, “My system is brainlike because it is massively parallel.”

Michael Jordan: Well, these are metaphors, which can be useful. Flows and pipelines are metaphors that come out of circuits of various kinds. I think in the early 1980s, computer science was dominated by sequential architectures, by the von Neumann paradigm of a stored program that was executed sequentially, and as a consequence, there was a need to try to break out of that. And so people looked for metaphors of the highly parallel brain. And that was a useful thing.

But as the topic evolved, it was not neural realism that led to most of the progress. The algorithm that has proved the most successful for deep learning is based on a technique called back propagation. You have these layers of processing units, and you get an output from the end of the layers, and you propagate a signal backwards through the layers to change all the parameters. It’s pretty clear the brain doesn’t do something like that. This was definitely a step away from neural realism, but it led to significant progress. But people tend to lump that particular success story together with all the other attempts to build brainlike systems that haven’t been nearly as successful.
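Jordan describes back propagation this way: you get an output from the end of the layers, then propagate a signal backwards to change every parameter. That procedure can be sketched in plain Python. This is a minimal illustration under our own assumptions, not anything from the interview: one hidden layer of sigmoid units, a single linear output, squared-error loss, and plain gradient descent. The helper names (`forward`, `backprop`, `sgd_step`) and the training example are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Hidden layer h = sigmoid(W1 x + b1); scalar output y = w2 . h + b2."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sum(w * hi for w, hi in zip(w2, h)) + b2
    return h, y


def backprop(params, x, target):
    """Return the loss 0.5 * (y - target)**2 and its gradient for every parameter."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    # Error signal at the end of the layers...
    dy = y - target
    dw2 = [dy * hi for hi in h]
    db2 = dy
    # ...propagated backwards through the output weights and the sigmoid,
    # whose derivative is h * (1 - h).
    dz = [dy * w * hi * (1.0 - hi) for w, hi in zip(w2, h)]
    dW1 = [[dzi * xi for xi in x] for dzi in dz]
    db1 = dz
    return loss, (dW1, db1, dw2, db2)


def sgd_step(params, grads, lr):
    """Change all the parameters a small step against their gradients."""
    W1, b1, w2, b2 = params
    dW1, db1, dw2, db2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, dW1)]
    b1 = [b - lr * g for b, g in zip(b1, db1)]
    w2 = [w - lr * g for w, g in zip(w2, dw2)]
    return W1, b1, w2, b2 - lr * db2


# Usage: fit one made-up training example with a 2-input, 3-hidden-unit network.
rng = random.Random(0)
params = (
    [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(3)],
    [rng.uniform(-1, 1) for _ in range(3)],
    [rng.uniform(-1, 1) for _ in range(3)],
    0.0,
)
x, target = [0.5, -1.0], 1.0
for step in range(50):
    loss, grads = backprop(params, x, target)
    params = sgd_step(params, grads, lr=0.1)
print(f"loss before the last update: {loss:.6f}")
```

Practical deep-learning systems apply the same chain-rule bookkeeping across many layers. They usually rely on automatic differentiation rather than hand-written gradients.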

Spectrum: Another point you’ve made regarding the failure of neural realism is that there is nothing very neural about neural networks.

Michael Jordan: There are no spikes in deep-learning systems. There are no dendrites. And they have bidirectional signals that the brain doesn’t have.

We don’t know how neurons learn. Is it actually just a small change in the synaptic weight that’s responsible for learning? That’s what these artificial neural networks are doing. In the brain, we have precious little idea how learning is actually taking place.

Spectrum: I read all the time about engineers describing their new chip designs in what seems to me to be an incredible abuse of language. They talk about the “neurons” or the “synapses” on their chips. But that can’t possibly be the case; a neuron is a living, breathing cell of unbelievable complexity. Aren’t engineers appropriating the language of biology to describe structures that have nothing remotely close to the complexity of biological systems?

Michael Jordan: Well, I want to be a little careful here. I think it’s important to distinguish two areas where the word neural is currently being used.

One of them is in deep learning. And there, each “neuron” is really a cartoon. It’s a linear-weighted sum that’s passed through a nonlinearity. Anyone in electrical engineering would recognize those kinds of nonlinear systems. Calling that a neuron is clearly, at best, a shorthand. It’s really a cartoon. There is a procedure called logistic regression in statistics that dates from the 1950s, which had nothing to do with neurons but which is exactly the same little piece of architecture.
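The equivalence Jordan describes can be checked directly. The sketch below assumes the logistic sigmoid as the nonlinearity (many modern networks use others, such as ReLU). It writes the "neuron" and the logistic-regression probability as two independent functions, using made-up parameter values. For the same weights and input, they return the same number.

```python
import math


def artificial_neuron(weights, bias, inputs):
    """Deep-learning 'neuron': a linear weighted sum passed through a nonlinearity
    (here the logistic sigmoid)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def logistic_regression_probability(coefficients, intercept, features):
    """Logistic regression: the log-odds of y = 1 are linear in the features,
    so P(y = 1 | x) = odds / (1 + odds)."""
    log_odds = intercept + sum(c * f for c, f in zip(coefficients, features))
    odds = math.exp(log_odds)
    return odds / (1.0 + odds)


# Same parameters, same input: the two functions agree to floating-point precision.
w, b, x = [0.8, -1.5, 0.3], 0.2, [1.0, 0.5, -2.0]
print(artificial_neuron(w, b, x), logistic_regression_probability(w, b, x))
```

The two differ only in vocabulary: "weights" and "bias" versus "coefficients" and "intercept."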

A second area involves what you were describing and is aiming to get closer to a simulation of an actual brain, or at least to a simplified model of actual neural circuitry, if I understand correctly. But the problem I see is that the research is not coupled with any understanding of what algorithmically this system might do. It’s not coupled with a learning system that takes in data and solves problems, like in vision. It’s really just a piece of architecture with the hope that someday people will discover algorithms that are useful for it. And there’s no clear reason that hope should be borne out. It is based, I believe, on faith that if you build something like the brain, it will become clear what it can do.

Spectrum: If you could, would you declare a ban on using the biology of the brain as a model in computation?

Michael Jordan: No. You should get inspiration from wherever you can get it. As I alluded to before, back in the 1980s, it was actually helpful to say, “Let’s move out of the sequential, von Neumann paradigm and think more about highly parallel systems.” But in this current era, where it’s clear that the detailed processing the brain is doing is not informing algorithmic process, I think it’s inappropriate to use the brain to make claims about what we’ve achieved. We don’t know how the brain processes visual information.

-

Our Foggy Vision About Machine Vision

Spectrum: You’ve used the word hype in talking about vision system research. Lately there seems to be an epidemic of stories about how computers have tackled the vision problem, and that computers have become just as good as people at vision. Do you think that’s even close to being true?

Michael Jordan: Well, humans are able to deal with cluttered scenes. They are able to deal with huge numbers of categories. They can deal with inferences about the scene: “What if I sit down on that?” “What if I put something on top of something?” These are far beyond the capability of today’s machines. Deep learning is good at certain kinds of image classification. “What object is in this scene?”

But the computational vision problem is vast. It’s like saying when that apple fell out of the tree, we understood all of physics. Yeah, we understood something more about forces and acceleration. That was important. In vision, we now have a tool that solves a certain class of problems. But to say it solves all problems is foolish.

Spectrum: How big of a class of problems in vision are we able to solve now, compared with the totality of what humans can do?

Michael Jordan: With face recognition, it’s been clear for a while now that it can be solved. Beyond faces, you can also talk about other categories of objects: “There’s a cup in the scene.” “There’s a dog in the scene.” But it’s still a hard problem to talk about many kinds of different objects in the same scene and how they relate to each other, or how a person or a robot would interact with that scene. There are many, many hard problems that are far from solved.

Spectrum: Even in facial recognition, my impression is that it still only works if you’ve got pretty clean images to begin with.

Michael Jordan: Again, it’s an engineering problem to make it better. As you will see over time, it will get better. But this business about “revolutionary” is overwrought.

-

Why Big Data Could Be a Big Fail

Spectrum: If we could turn now to the subject of big data, a theme that runs through your remarks is that there is a certain fool’s gold element to our current obsession with it. For example, you’ve predicted that society is about to experience an epidemic of false positives coming out of big-data projects.

Michael Jordan: When you have large amounts of data, your appetite for hypotheses tends to get even larger. And if it’s growing faster than the statistical strength of the data, then many of your inferences are likely to be false. They are likely to be white noise.

Spectrum: How so?

Michael Jordan: In a classical database, you have maybe a few thousand people in it. You can think of those as the rows of the database. And the columns would be the features of those people: their age, height, weight, income, et cetera.

Now, the number of combinations of these columns grows exponentially with the number of columns. So if you have many, many columns—and we do in modern databases—you’ll get up into millions and millions of attributes for each person.

Now, if I start allowing myself to look at all of the combinations of these features (if you live in Beijing, and you ride a bike to work, and you work in a certain job, and are a certain age), what’s the probability you will have a certain disease or you will like my advertisement? Now I’m getting combinations of millions of attributes, and the number of such combinations is exponential; it gets to be the size of the number of atoms in the universe.

Those are the hypotheses that I’m willing to consider. And for any particular database, I will find some combination of columns that will predict perfectly any outcome, just by chance alone. If I just look at all the people who have a heart attack and compare them to all the people that don’t have a heart attack, and I’m looking for combinations of the columns that predict heart attacks, I will find all kinds of spurious combinations of columns, because there are huge numbers of them.

So it’s like having billions of monkeys typing. One of them will write Shakespeare.
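The "billions of monkeys" effect is easy to reproduce. The sketch below is our own toy setup, not Jordan's. The outcome is pure coin flips, unrelated to any column. Even so, searching all combinations of up to three of 20 columns turns up one that fits the training rows well. The row and column counts, the seed, and the choice of XOR (parity) as the way to combine columns are arbitrary assumptions.

```python
import itertools
import random


def make_noise_data(n_rows, n_cols, rng):
    """Random 0/1 columns plus an outcome that is independent of every column."""
    X = [[rng.randint(0, 1) for _ in range(n_cols)] for _ in range(n_rows)]
    y = [rng.randint(0, 1) for _ in range(n_rows)]
    return X, y


def predict(row, cols):
    """One hypothesis: the outcome is the parity (XOR) of the chosen columns."""
    return sum(row[c] for c in cols) % 2


def accuracy(X, y, cols):
    return sum(predict(row, cols) == label for row, label in zip(X, y)) / len(y)


def search_hypotheses(X, y, max_size):
    """Score every combination of up to max_size columns; keep the best in-sample fit."""
    best_cols, best_acc, tried = None, -1.0, 0
    for size in range(1, max_size + 1):
        for cols in itertools.combinations(range(len(X[0])), size):
            tried += 1
            acc = accuracy(X, y, cols)
            if acc > best_acc:
                best_cols, best_acc = cols, acc
    return best_cols, best_acc, tried


rng = random.Random(42)
X_train, y_train = make_noise_data(30, 20, rng)    # 30 "people", 20 columns
X_fresh, y_fresh = make_noise_data(2000, 20, rng)  # new people from the same noise
cols, train_acc, tried = search_hypotheses(X_train, y_train, max_size=3)
print(f"searched {tried} combinations; best {cols} fits {train_acc:.0%} of the data")
print(f"on fresh data it scores {accuracy(X_fresh, y_fresh, cols):.0%}")
```

On fresh data, the winning combination falls back to roughly coin-flip accuracy. With 20 columns there are already 2**20 (about a million) possible column subsets. Allowing larger combinations or more columns therefore only makes the best spurious fit look better.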

Spectrum: Do you think this aspect of big data is currently underappreciated?

Michael Jordan: Definitely.

Spectrum: What are some of the things that people are promising for big data that you don’t think they will be able to deliver?

Michael Jordan: I think data analysis can deliver inferences at certain levels of quality. But we have to be clear about what levels of quality. We have to have error bars around all our predictions. That is something that’s missing in much of the current machine learning literature.
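One standard way to put error bars on an estimate, such as a model's accuracy, is the percentile bootstrap. Jordan does not name a method. The bootstrap, the 95% level, and the resample count here are our illustrative choices, and the 0/1 test results are made up.

```python
import random
import statistics


def bootstrap_interval(values, statistic=statistics.fmean, n_resamples=2000,
                       level=0.95, seed=0):
    """Percentile bootstrap: resample the data with replacement, recompute the
    statistic each time, and take the middle `level` share of those estimates
    as the error bar. Returns (point_estimate, lower, upper)."""
    rng = random.Random(seed)
    n = len(values)
    estimates = sorted(
        statistic([values[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_resamples)
    )
    tail = (1.0 - level) / 2.0
    lower = estimates[int(tail * (n_resamples - 1))]
    upper = estimates[int(round((1.0 - tail) * (n_resamples - 1)))]
    return statistic(values), lower, upper


# Hypothetical test-set results: 1 = prediction correct, 0 = wrong.
correct = [1] * 150 + [0] * 50
estimate, lower, upper = bootstrap_interval(correct)
print(f"accuracy {estimate:.3f}, 95% interval [{lower:.3f}, {upper:.3f}]")
```

Reporting the interval alongside the 0.75 point estimate tells a reader how much that number could move with a different sample of 200 test cases.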

Spectrum: What will happen if people working with data don’t heed your advice?

Michael Jordan: I like to use the analogy of building bridges. If I have no principles, and I build thousands of bridges without any actual science, lots of them will fall down, and great disasters will occur.

Similarly here, if people use data and inferences they can make with the data without any concern about error bars, about heterogeneity, about noisy data, about the sampling pattern, about all the kinds of things that you have to be serious about if you’re an engineer and a statistician—then you will make lots of predictions, and there’s a good chance that you will occasionally solve some real interesting problems. But you will occasionally have some disastrously bad decisions. And you won’t know the difference a priori. You will just produce these outputs and hope for the best.

And so that’s where we are currently. A lot of people are building things hoping that they work, and sometimes they will. And in some sense, there’s nothing wrong with that; it’s exploratory. But society as a whole can’t tolerate that; we can’t just hope that these things work. Eventually, we have to give real guarantees. Civil engineers eventually learned to build bridges that were guaranteed to stand up. So with big data, it will take decades, I suspect, to get a real engineering approach, so that you can say with some assurance that you are giving out reasonable answers and are quantifying the likelihood of errors.

Spectrum: Do we currently have the tools to provide those error bars?

Michael Jordan: We are just getting this engineering science assembled. We have many ideas that come from hundreds of years of statistics and computer science. And we’re working on putting them together, making them scalable. A lot of the ideas for controlling what are called familywise errors, where I have many hypotheses and want to know my error rate, have emerged over the last 30 years. But many of them haven’t been studied computationally. It’s hard mathematics and engineering to work all this out, and it will take time.
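Holm's step-down procedure illustrates familywise error control; the interview names no specific method, so this choice is ours. Holm's method refines Bonferroni's rule of comparing every p-value with alpha/m. The p-values below are hypothetical.

```python
def naive_rejections(p_values, alpha=0.05):
    """Test each hypothesis on its own at level alpha (no multiplicity control)."""
    return [p <= alpha for p in p_values]


def holm_rejections(p_values, alpha=0.05):
    """Holm's step-down procedure: keeps the familywise error rate, the chance of
    at least one false rejection among all m hypotheses, at most alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for k, i in enumerate(order):
        # The k-th smallest p-value (k = 0, 1, ...) must clear alpha / (m - k).
        if p_values[i] > alpha / (m - k):
            break  # stop here: this and every larger p-value are kept
        reject[i] = True
    return reject


# Hypothetical p-values from five hypotheses tested on the same data.
p = [0.04, 0.001, 0.3, 0.02, 0.01]
print("naive:", naive_rejections(p))
print("holm: ", holm_rejections(p))
```

Testing each hypothesis on its own would declare four of the five significant, while Holm keeps only two. That is the price of bounding the chance of any false discovery across the whole family.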

It’s not a year or two. It will take decades to get right. We are still learning how to do big data well.

Spectrum: When you read about big data and health care, every third story seems to be about all the amazing clinical insights we’ll get almost automatically, merely by collecting data from everyone, especially in the cloud.

Michael Jordan: You can’t be completely a skeptic or completely an optimist about this. It is somewhere in the middle. But if you list all the hypotheses that come out of some analysis of data, some fraction of them will be useful. You just won’t know which fraction. So if you just grab a few of them—say, if you eat oat bran you won’t have stomach cancer or something, because the data seem to suggest that—there’s some chance you will get lucky. The data will provide some support.

But unless you’re actually doing the full-scale engineering statistical analysis to provide some error bars and quantify the errors, it’s gambling. It’s better than just gambling without data. That’s pure roulette. This is kind of partial roulette.

Spectrum: What adverse consequences might await the big-data field if we remain on the trajectory you’re describing?

Michael Jordan: The main one will be a “big-data winter.” After a bubble, when people invested and a lot of companies overpromised without providing serious analysis, it will bust. And soon, in a two- to five-year span, people will say, “The whole big-data thing came and went. It died. It was wrong.” I am predicting that. It’s what happens in these cycles when there is too much hype, i.e., assertions not based on an understanding of what the real problems are or on an understanding that solving the problems will take decades, that we will make steady progress but that we haven’t had a major leap in technical progress. And then there will be a period during which it will be very hard to get resources to do data analysis. The field will continue to go forward, because it’s real, and it’s needed. But the backlash will hurt a large number of important projects.

-

What He’d Do With $1 Billion

Spectrum: Considering the amount of money that is spent on it, the science behind serving up ads still seems incredibly primitive. I have a hobby of searching for information about silly Kickstarter projects, mostly to see how preposterous they are, and I end up getting served ads from the same companies for many months.

Michael Jordan: Well, again, it’s a spectrum. It depends on how a system has been engineered and what domain we’re talking about. In certain narrow domains, it can be very good, and in very broad domains, where the semantics are much murkier, it can be very poor. I personally find Amazon’s recommendation system for books and music to be very, very good. That’s because they have large amounts of data, and the domain is rather circumscribed. With domains like shirts or shoes, it’s murkier semantically, and they have less data, and so it’s much poorer.

There are still many problems, but the people who build these systems are hard at work on them. What we’re getting into at this point is semantics and human preferences. If I buy a refrigerator, that doesn’t show that I am interested in refrigerators in general. I’ve already bought my refrigerator, and I’m probably not likely to still be interested in them. Whereas if I buy a song by Taylor Swift, I’m more likely to buy more songs by her. That has to do with the specific semantics of singers and products and items. To get that right across the wide spectrum of human interests requires a large amount of data and a large amount of engineering.

Spectrum: You’ve said that if you had an unrestricted $1 billion grant, you would work on natural language processing. What would you do that Google isn’t doing with Google Translate?

Michael Jordan: I am sure that Google is doing everything I would do. But I don’t think Google Translate, which involves machine translation, is the only language problem. Another example of a good language problem is question answering, like “What’s the second-biggest city in California that is not near a river?” If I typed that sentence into Google currently, I’m not likely to get a useful response.

Spectrum: So are you saying that for a billion dollars, you could, at least as far as natural language is concerned, solve the problem of generalized knowledge and end up with the big enchilada of AI: machines that think like people?

Michael Jordan: So you’d want to carve off a smaller problem that is not about everything, but which nonetheless allows you to make progress. That’s what we do in research. I might take a specific domain. In fact, we worked on question-answering in geography. That would allow me to focus on certain kinds of relationships and certain kinds of data, but not everything in the world.

Spectrum: So to make advances in question answering, will you need to constrain them to a specific domain?

Michael Jordan: It’s an empirical question about how much progress you could make. It has to do with how much data is available in these domains. How much you could pay people to actually start to write down some of those things they knew about these domains. How many labels you have.

Spectrum: It seems disappointing that even with a billion dollars, we still might end up with a system that isn’t generalized, but that only works in just one domain.

Michael Jordan: That’s typically how each of these technologies has evolved. We talked about vision earlier. The earliest vision systems were face-recognition systems. That’s domain bound. But that’s where we started to see some early progress and had a sense that things might work. Similarly with speech, the earliest progress was on single detached words. And then slowly, it started to get to be where you could do whole sentences. It’s always that kind of progression, from something circumscribed to something less and less so.

Spectrum: Why do we even need better question-answering? Doesn’t Google work well enough as it is?

Michael Jordan: Google has a very strong natural language group working on exactly this, because they recognize that they are very poor at certain kinds of queries. For example, using the word not. Humans want to use the word not. For example, “Give me a city that is not near a river.” In the current Google search engine, that’s not treated very well.

-

How Not to Talk About the Singularity

Spectrum: Turning now to some other topics, if you were talking to someone in Silicon Valley, and they said to you, “You know, Professor Jordan, I’m a really big believer in the singularity,” would your opinion of them go up or down?

Michael Jordan: I luckily never run into such people.

Spectrum: Oh, come on.

Michael Jordan: I really don’t. I live in an intellectual shell of engineers and mathematicians.

Spectrum: But if you did encounter someone like that, what would you do?

Michael Jordan: I would take off my academic hat, and I would just act like a human being thinking about what’s going to happen in a few decades, and I would be entertained just like when I read science fiction. It doesn’t inform anything I do academically.

Spectrum: Okay, but knowing what you do academically, what do you think about it?

Michael Jordan: My understanding is that it’s not an academic discipline. Rather, it’s partly philosophy about how society changes, how individuals change, and it’s partly literature, like science fiction, thinking through the consequences of a technology change. But as far as I can tell, they don’t produce algorithmic ideas that inform us about how to make technological progress; I don’t ever see any.

-

What He Cares About More Than Whether P = NP

Spectrum: Do you have a guess about whether P = NP? Do you care?

Michael Jordan: I tend to be not so worried about the difference between polynomial and exponential. I’m more interested in low-degree polynomial: linear time, linear space. P versus NP has to do with categorization of algorithms as being polynomial, which means they are tractable, or exponential, which means they’re not.

I think most people would agree that probably P is not equal to NP. As a piece of mathematics, it’s very interesting to know. But it’s not a hard and sharp distinction. There are many exponential-time algorithms that, partly because of the growth of modern computers, are still viable in certain circumscribed domains. And moreover, for the largest problems, polynomial is not enough. Polynomial just means that it grows at a certain superlinear rate, like quadratic or cubic. But it really needs to grow linearly. So if you get five more data points, you need five more units of processing. Or even sublinearly, like logarithmic. As I get 100 new data points, it grows by two; if I get 1,000, it grows by three.
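To make Jordan's contrast between growth rates concrete, the sketch below tabulates work under logarithmic, linear, quadratic, and cubic cost models, ignoring constant factors. Exponential is left out because its numbers become unprintably large almost immediately. We read his "100 points, grows by two; 1,000, grows by three" as a base-10 logarithm; that reading is our interpretation.

```python
import math

# Work needed for n data points under each growth rate (constant factors ignored).
# Base-10 log matches the interview's "100 points -> 2, 1,000 points -> 3".
COST = {
    "logarithmic": lambda n: math.log10(n),
    "linear": lambda n: n,
    "quadratic": lambda n: n ** 2,
    "cubic": lambda n: n ** 3,
}


def extra_work(kind, n, added):
    """Additional work when `added` more data points arrive on top of n."""
    return COST[kind](n + added) - COST[kind](n)


for n in (100, 1_000, 1_000_000):
    row = ", ".join(f"{kind} {cost(n):,.0f}" for kind, cost in COST.items())
    print(f"n = {n:>9,}: {row}")
```

At n = 1,000, five extra points cost five extra units under the linear model but 10,025 under the quadratic one. That gap is what he is pointing at.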

That’s the ideal. Those are the kinds of algorithms we have to focus on. And that is very far away from the P versus NP issue. It’s a very important and interesting intellectual question, but it doesn’t inform that much about what we work on.

Spectrum: Same question about quantum computing.

Michael Jordan: I am curious about all these things academically. It’s real. It’s interesting. It doesn’t really have an impact on my area of research.

-

What the Turing Test Really Means

Spectrum: Will a machine pass the Turing test in your lifetime?

Michael Jordan: I think you will get a slow accumulation of capabilities, including in domains like speech and vision and natural language. There will probably not ever be a single moment in which we would want to say, “There is now a new intelligent entity in the universe.” I think that systems like Google already provide a certain level of artificial intelligence.

Spectrum: They are definitely useful, but they would never be confused with being a human being.

Michael Jordan: No, they wouldn’t be. I don’t think most of us think the Turing test is a very clear demarcation. Rather, we all know intelligence when we see it, and it emerges slowly in all the devices around us. It doesn’t have to be embodied in a single entity. I can just notice that the infrastructure around me got more intelligent. All of us are noticing that all of the time.

Spectrum: When you say “intelligent,” are you just using it as a synonym for “useful”?

Michael Jordan: Yes. What our generation finds surprising (that a computer recognizes our needs and wants and desires, in some ways) our children find less surprising, and our children’s children will find even less surprising. It will just be assumed that the environment around us is adaptive; it’s predictive; it’s robust. That will include the ability to interact with your environment in natural language. At some point, you’ll be surprised by being able to have a natural conversation with your environment. Right now we can sort of do that, within very limited domains. We can access our bank accounts, for example. They are very, very primitive. But as time goes on, we will see those things get more subtle, more robust, more broad. At some point, we’ll say, “Wow, that’s very different from when I was a kid.” The Turing test has helped get the field started, but in the end, it will be sort of like Groundhog Day: a media event, but something that’s not really important.

About the Author

Lee Gomes, a former Wall Street Journal reporter, has been covering Silicon Valley for more than two decades.

What is life about?

Practicing the Moment of Focus: “The Zone” (zz)

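One weekend morning during a business trip to Japan, I was standing at a bus stop, on my way to take a walk by the sea. The street was empty and my phone couldn’t get online, so I simply went offline and let my mind go blank. After a while, an elderly Japanese gentleman came around the corner, his suitcase rolling along the sidewalk and cutting through the stillness hanging in the air. When he reached the bus stop, he folded down the suitcase handle, and the aluminum frame gave a crisp click. He took a small bag from inside his coat, slid open the zipper, drew out a ticket, and let out a soft breath.

Suddenly I realized how many sound effects accompanied these few small movements.

If I hadn’t been traveling, with my senses sharper than usual, and hadn’t happened to have nothing to do at that moment, I would never have noticed these details.

Two Different Meanings of Focus

Ask ten successful people for the secret of their success and you will probably hear “focus” eleven times, because someone will say it twice for emphasis. Focus comes in two kinds. One is focus on a goal, like the kind described in “Catch a Big Fish or a Small Fish?” The other, which I want to share this time, is momentary focus. The first kind requires thorough self-understanding and is closer to “rational focus.” The second kind requires throwing yourself completely into a single task for a stretch of time, and it is hard to force through reason: one careless moment, and your left thumb and ring finger automatically press Alt + Tab to switch over to Facebook or LINE. I think this kind of focus is closer to a spiritual discipline; it is “non-rational focus.”

Non-rational focus has become a luxury in modern life.

As technology brings us more and more convenience, it also digs ditch after ditch into the embankment of our focus, diverting attention that should flow toward our goals. We have turned ourselves into multi-core processors, used to handling several jobs at once, with some always-on background programs constantly draining our attention. There is a simple test: close your eyes and try not to think about anything. Many people will immediately find this very hard. All kinds of thoughts keep rising and falling in the mind, like the colored lights and shadows flickering under your eyelids after you close your eyes, never resting for a moment.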


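Improving Work Efficiency

When traveling, I often feel that reaching my destination takes much longer than the sum of the individual legs of the trip. A few times I worked it out carefully and found that my estimate had ignored the scraps of time spent transferring and waiting, and those scraps ended up eating a surprising amount of time. Work may be the same: over a whole day, how much time do we spend “transferring” and “waiting”? The time we truly spend focused on a task is probably less than half.

Worse, when traveling we know perfectly well whether we are standing on the platform or sitting on the train, but at work we may not know whether we are actually working hard or drifted off long ago. Approaching work with the mindset of handling chores is not only half as productive for twice the effort; when the two mindsets keep alternating, our focused time gets chopped into fragments.

Fragmented time does far more damage than we imagine.

Back in university, a professor once said in class:

The relationship between the time you put in and the results you get is not linear but exponential.

To put it less mathematically: compare spending ten minutes a day for all seven days of a week with spending one uninterrupted hour and ten minutes in a single morning. The second will produce far more than the first.

Of course, not everything works this way. For memorization tasks such as learning vocabulary, ten minutes a day may actually work better. But for creative work that demands heavy brainpower, or for solving a hard problem, results only come from a long, uninterrupted stretch of effort. A relief pitcher has to warm up in the bullpen and throw a few practice pitches before taking the mound, and only then does he gradually find his rhythm. Human willpower obeys the laws of motion too: it must first overcome maximum static friction before it can start moving. Fragmented time ends before you have even warmed up, so it does nothing for solving hard problems. Conversely, as long as you can sustain your focus, your efficiency rises dramatically once the warm-up is done.

The writer Hou Wen-yung once wrote on Facebook that when running marathons, he often wanted to stop during the last few kilometers. But his coach told him that a runner not only must not give up then, but should treasure those final kilometers, because their feeling and training effect can only be had after running dozens of kilometers first, and they are far more valuable than the first few. By the same logic, focusing on a piece of work is definitely not something you achieve by unplugging the network cable, patting your chubby cheeks, and saying, “OK, time to work.”

It is a stage you reach only after a period of brewing, settling, and focused immersion.

Seeing More Details

Focus not only makes you more efficient; it also lets you notice details you would normally overlook.

When talking with someone, try not thinking about how to reply, not playing with your phone, and not eavesdropping on the couple flirting at the next table, and put your whole mind on the other person. If you manage it, you will suddenly notice that their facial expressions, gestures, and tone of voice are changing from moment to moment, and that all of it is connected. We normally miss these details because we are not focused enough. Interestingly, at the very moment you notice these details, you miss the details that are happening right now.

That is because we are already distracted, chewing on the details we just picked up. True focus is like a needle: it can only converge on a single instant, and even whatever you gain from it has to be set aside for the moment.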


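Applied to work: some people seem easygoing, even scatterbrained, yet on the job they spot details others miss. They are not putting on an act, nor have they read too much of The Kindaichi Case Files and decided to imitate Kindaichi; they simply know how to focus in an instant. It is like what athletes call “the Zone.”

Inside that zone, everything moves in slow motion, even the most complicated things become clear, and everything lies spread out before you in full detail. All of this comes from momentary focus.

Training Your Focus

There are a few techniques that may help you reach focus. With a task that has to be repeated (such as proofreading), you are most focused the first time, and your focus gradually declines after that. Unfinished, nagging chores, such as an email you should have answered but haven’t, also get in the way. Environment matters a lot: some people need absolute silence, while those who work better with a little background noise can use an aid such as Coffitivity. There are also personal methods and small habits: some people eat something first, others like to start after exercising and showering. As for me, I wash my glasses first, because whenever I start to focus, the first thing I see is the dust on my lenses, and then it bothers me.

But the most important thing is practice.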


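Like trimming your waistline, training focus has no shortcut; you can only keep at it, doing Jung Da-yeon’s workout every day. Perhaps we can start with 20 minutes: pick 20 minutes each in the morning, afternoon, and evening, cut yourself off from the outside world, switch to airplane mode, and throw yourself into one thing. Once you are used to it, lengthen the time gradually, adding 10 minutes each week. After a month, you will have three hours every day of the ability to focus, something most modern people have lost but which is the most precious ability of all.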
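The arithmetic behind "three hours a day after a month" works out under our reading of the schedule. We assume each of the three daily sessions grows by 10 minutes every week, and we count a month as four weekly increases. The function name is hypothetical.

```python
def daily_focus_minutes(weeks_elapsed, start=20, weekly_increase=10, sessions=3):
    """Total focused minutes per day after a number of weekly increases.
    Assumes each of the daily sessions grows by weekly_increase every week."""
    return sessions * (start + weekly_increase * weeks_elapsed)


for week in range(5):
    print(f"after {week} week(s): {daily_focus_minutes(week)} minutes a day")
```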

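Personal Charisma (zz)

3. Personal charisma is a very broad concept. Some people embody “ten years of drinking ice cannot cool my hot blood”; some live by “if it serves the nation, I will give my life for it; how could I let fortune or misfortune decide what I pursue or avoid?”; some declare, “if one day I fulfill my soaring ambition, I will dare to laugh that Huang Chao was no real man.” Such people may be neither composed nor entertaining, nor attentive to every detail, yet their charisma is not diminished in the least. In short, living genuinely and with zest, benefiting others and holding yourself accountable, is probably good enough.

------------------------

Douban already has an excellent answer:

1: Composure

(1) Don’t casually show your emotions.

(2) Don’t tell everyone you meet about your difficulties and misfortunes.

(3) Before asking others for their opinions, think it through yourself first, but don’t speak first.

(4) Don’t grumble about your complaints at every opportunity.

(5) For important decisions, consult others as much as possible, and ideally wait a day before announcing them.

(6) Don’t be flustered when you speak, and don’t be flustered when you walk.

2: Attentiveness

(1) Regularly think about the causes and effects of what happens around you.

(2) When execution falls short, dig out the root cause.

(3) For habitual ways of doing things, come up with suggestions to improve or optimize them.

(4) Make it a habit to do everything in an orderly, methodical way.

(5) Regularly look for a few flaws or weaknesses that others cannot see.

(6) Always be ready to fill in wherever something is lacking.

3: Courage and Judgment

(1) Don’t habitually use words that lack confidence.

(2) Don’t keep changing your mind and casually overturning decisions already made.

(3) When everyone is arguing endlessly, don’t be without an opinion of your own.

(4) When the overall mood is low, be optimistic and upbeat.

(5) Put your heart into everything you do, because someone is watching you.

(6) When things aren’t going well, catch your breath and look for a new breakthrough; and even if it has to end, end it cleanly.

4: Magnanimity

(1) Don’t deliberately turn people who could be partners into rivals.

(2) Don’t nitpick over other people’s small faults and minor mistakes.

(3) Be generous with money, and learn the three kinds of giving (giving wealth, giving the Dharma, and giving fearlessness).

(4) Don’t have the arrogance of power or the prejudice of knowledge.

(5) Share every result and achievement with others.

(6) When someone must make a sacrifice or a contribution, step forward first.

5: Integrity

(1) Don’t promise what you can’t do; once you have said it, work hard to do it.

(2) Don’t keep empty slogans on your lips.

(3) When customers raise issues of “dishonesty,” come up with concrete ways to improve.

(4) Stop all “unethical” tactics.

(5) Playing petty tricks is unacceptable!

(6) Calculate the cost of integrity for your products or services; that is your brand cost.

6: Responsibility

(1) When reviewing any mistake, start by examining yourself or your own people.

(2) When a project ends, review the mistakes first, then list the achievements.

(3) Admitting mistakes starts at the top; claiming credit starts at the bottom.

(4) When starting a plan, first define authority and responsibility clearly and allocate them properly.

(5) Be blunt with people or organizations that are “afraid of trouble.”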

怎样提高自己的人格魅力? zz

我看过一场演唱会,开演唱会的称之为歌手A吧。她请了三个嘉宾,歌手B、演员C、歌手D。

歌手A自己唱了一个多小时,中间插了几次talking,女王气场十足,讲话风趣幽默。然后请上歌手B。两个人各自坐下来,开始弹唱聊天。如果按在大陆的知名度算,B可能还比A更有名些。但B全程微笑,态度谦和,各种赞扬歌手A,弹吉他给A伴奏。

这时演员C出现了,显得有点仓促,她解释说因为刚从片场下来,所以晚到了,然后各种“嘤嘤嘤讨厌啦”,小撒娇小羞涩。她来了以后,一起加入聊天,话题就转移到了她的身上,看得出来在平时的私交中,她应该也是小公主的角色。

在B、C都已经退场以后,D才出现,而且她是从观众席后面进来的。这个场地是livehouse,所有人都挤在一起站着。A邀请她上台,她说她是来预祝A新专辑大卖的,就不上来了,希望观众们和她一起唱一首歌献给A。然后她爆出今天其实是A的生日,全场观众齐唱了祝你生日快乐,气氛很温馨感动。唱完D就say goodbye了,我因为站得比较靠前,甚至都没看见她的真容。

不谈演唱会的效果,单从人格魅力方面排个名,我认为是:B>A>D>C。

C来晚不说,还喧宾夺主,扭扭捏捏,一度引起气氛尴尬;D的选择就明智得多,既然来晚了,干脆就借机鼓动全场大合唱,煽动气氛;A作为主角,镇住全场当仁不让;B自己也很红,但作为嘉宾甘当绿叶,衬托主角,我认为更胜一筹。

说上面这些是为了说明,人格魅力是要看场合的。在一个圈子里最牛的人,到了另一个圈子可能就只是小透明,找准自己的定位,再分析采取什么样的策略。

我在另一个答案怎样塑造自身的人格魅力?中,给“人格魅力”下了个我自己的定义:

【人格魅力】归根结底就是一个人能提供的【正能量的输出】的总和。

所谓的人格魅力固然来自内心的强大和充实,但我想强调的是“输出”二字。就算内在一时还达不到,但“输出”却可以通过外在的表现来弥补。

人格魅力(外在输出)×能力(内在积淀)=一个人的总能量。

在大多数情况下,前者略微大于后者,是比较理想的搭配。

也就是说,你表现出来的所谓的“脾气”,不要超过你所谓的“本事”。

所以说。如果你是小透明,就安静地做一枚小透明。假如你处在一群大牛、或者是一群和你不同领域的人之中,在专业方面没什么可跟人比的,这时你唯一拿得出手的正能量就是“谦虚”、“随和”、“温暖”、“周到”这些相对不需要“干货”的特质了。请不要刷存在感,也不要碍手碍脚。在需要你的地方,默默做好自己的事情就够了。

就像她一样。认真做事、不浮夸的人是会赢得尊重的。

那好,现在知道什么时候该低调了。那在大多数情况下,我们身处的圈子,和我们本人没有太大的差距,大家的level比较平均。在这种情况下,也想提高个人魅力、脱颖而出怎么办?固然,人格魅力归根结底来源于自身的修养,但恰当地表现也非常重要。

可能在生活中,我们很难长期观察一个人的为人处事,归纳TA的人格魅力是如何展现的;但在影视作品里就不一样了。在有限的篇幅中,创作者会用最简练的手法让你确信,这个人是个人格魅力很强的人。

为什么我们觉得他有人格魅力?他机智,幽默,冷静,乐观,在黑色的集中营中,能给孩子创造一个美好的童话。在死亡降临的时候,还能从容地面对。

为什么我们觉得他有人格魅力?他亦正亦邪,表面傲慢,内心有爱,关键时刻坚持原则。

为什么我们觉得她有人格魅力?她敢于挑战世俗,目标明确且不择手段,敢爱敢恨,坚韧不拔。否则,同样富有人格魅力的白瑞德也不会奋不顾身地爱上她。

他们有个共同的特征:反差。

不管是与大环境的反差,还是与自身的反差,这种反差是令人脱颖而出的关键。当然,是正面、积极的反差。

与大环境的反差:学生组织选干部,如果一群学生都是乳臭未干,吵吵闹闹,长篇大论讲述自己的丰功伟绩,表明自己的远大理想和为组织奋斗终生的目标,那么如果有一个人不卑不亢,温文尔雅,对别人表现出极大的谦让和尊重,那么这个人就很容易被认为有人格魅力。

与自身的反差:你要去采访一位科学家,他搞的研究都是很枯燥的那种,你以为他本人一定非常乏味,结果一见面,对方其实很开朗有趣,妙语连珠,笑声不断,在潜心学术的同时也是个很热爱生活的人,那么你就会觉得对方很有人格魅力。

人类的人格是由很多元素组成的,反差之所以会让人认为有魅力,是因为反差塑造出一种丰富、立体的人格结构。就像一颗每个面都有不同光泽的宝石,一眼看去无法窥其全部,就显得很有魅力。

所以,如果你想提升自己的人格魅力,可以从我上面说的“场合”和“反差”两个角度入手。当你处于一个场合当中,先迅速地判断一下他人的特点,找到自己的定位;然后,有选择性地输出你性格中可以制造“反差”的部分。

当然这一切都建立在你有干货的基础上,我给人格魅力的定义是正能量的输出,不管是什么方面的正能量,你首先得有正能量。至于如何增加干货,就不赘述了。